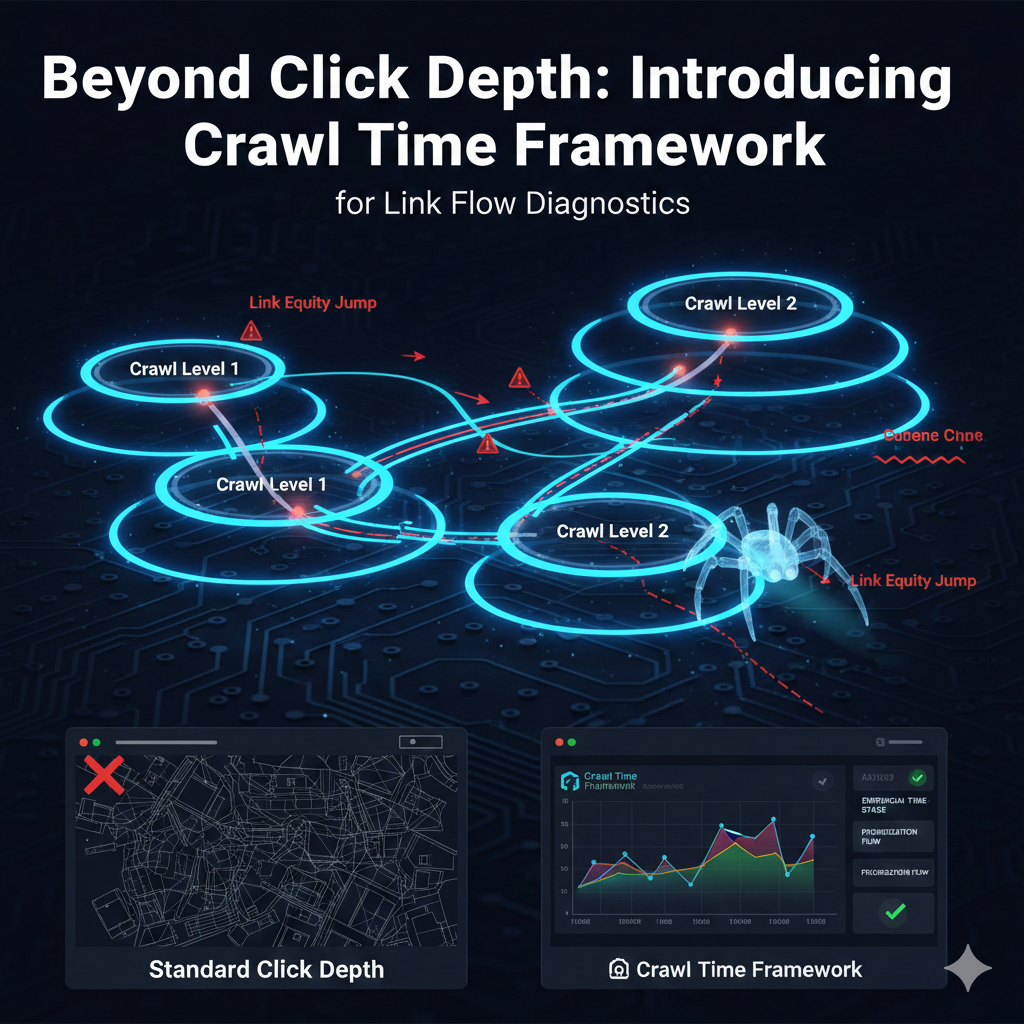

If you’re still relying on basic Click Depth or those clean, topological crawl maps, you’re missing the point. The fundamental issue is that neither can provide insights into crawler prioritization. They just show theoretical distance, which gives you zero visibility into the bot’s actual sequence.

This is the gap the Crawl Time Framework closes.

Marin Popov’s idea offers an entirely new perspective on Click Depth: it uses link discovery time as the metric, based on on-site factors only. It’s a clean diagnostic method. By assigning a distinct Crawl Level to every URL based on its timestamp in the log, we can finally stop guessing and start seeing the actual progression of the bot through the site.
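The exact definition of a Crawl Level is covered in the author’s documentation. As a rough illustration only, the sketch below assumes levels are fixed-width time windows counted from the first crawl event: URLs first seen in the first window are level 1, the next window is level 2, and so on. The function name `assign_crawl_levels` and the `stage_seconds` parameter are hypothetical, not part of the framework.

```python
from datetime import datetime


def assign_crawl_levels(rows, stage_seconds=60):
    """Assign a hypothetical Crawl Level to each URL.

    rows: iterable of (url, iso_timestamp) pairs taken from a crawl log.
    Assumption (not the framework's confirmed rule): a level is a fixed-width
    time window of `stage_seconds`, counted from the earliest timestamp.
    """
    parsed = [(url, datetime.fromisoformat(ts)) for url, ts in rows]
    if not parsed:
        return {}
    start = min(t for _, t in parsed)
    levels = {}
    for url, t in parsed:
        # Window index since the start of the crawl, shifted so level 1 is first.
        level = int((t - start).total_seconds() // stage_seconds) + 1
        # If a URL appears more than once, keep its earliest (lowest) level.
        levels[url] = min(level, levels.get(url, level))
    return levels


log = [
    ("https://example.com/", "2024-01-01T10:00:00"),
    ("https://example.com/blog/", "2024-01-01T10:00:30"),
    ("https://example.com/blog/post-1", "2024-01-01T10:01:15"),
    ("https://example.com/about/", "2024-01-01T10:02:05"),
]
# / and /blog/ -> level 1, /blog/post-1 -> level 2, /about/ -> level 3
print(assign_crawl_levels(log))
```

Fixed windows are the simplest possible choice; ranking URLs into equal-count stages would be an equally valid assumption if crawl speed varies a lot during the run.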

What this reveals that your current toolset doesn’t:

- The Crawl Level: Not just a click count, but the empirical time-stage required for discovery. This is the real measure of a page’s discoverability.

- Link Equity Jumps: The framework directly flags those structural inefficiencies—pages being skipped or accessed at unexpected stages—that signal a failure in your internal link sequencing.
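One way to picture a jump check, under a hypothetical rule of our own rather than the framework’s documented one: given Crawl Levels and the site’s internal links, flag any link whose target was reached more than `max_gap` stages after its source. A large gap hints that the link did little to speed up discovery of the target. The names `flag_level_jumps` and `max_gap` are illustrative only.

```python
def flag_level_jumps(levels, links, max_gap=1):
    """Flag internal links whose target was reached unexpectedly late.

    levels: dict mapping URL -> Crawl Level (however it was computed).
    links:  iterable of (source_url, target_url) internal links.
    Hypothetical rule: a "jump" is a link where the target's level exceeds
    the source's level by more than `max_gap` stages.
    """
    jumps = []
    for source, target in links:
        if source not in levels or target not in levels:
            continue  # skip links involving URLs missing from the crawl log
        gap = levels[target] - levels[source]
        if gap > max_gap:
            jumps.append((source, target, gap))
    # Largest gaps first, since they are the most suspicious.
    return sorted(jumps, key=lambda j: j[2], reverse=True)


levels = {"/": 1, "/blog/": 1, "/blog/post-1": 2, "/about/": 4}
links = [("/", "/blog/"), ("/blog/", "/blog/post-1"), ("/", "/about/")]
print(flag_level_jumps(levels, links))  # [('/', '/about/', 3)]
```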

If your objective is to improve link flow, crawl efficiency, and discovery, this data can give you valuable insights. Traditional methods simply can’t supply this level of empirical diagnostic detail.

Test the Framework Yourself

As a proof of concept, an application was built with Python and Streamlit. It includes detailed information and descriptions as well as example data. You have two options to try it yourself: upload files you already have from your preferred crawling tool, or run a quick example within the application. Either way, you can see the mechanism in action immediately:

➡️ Visit the Crawl Time Framework application here: https://crawl-time-framework.streamlit.app/
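If you want to prepare your own crawler export before uploading it, the first step is pulling URL and timestamp pairs out of the file. This is a minimal sketch using Python’s standard `csv` module; the default column names `Address` and `Crawl Timestamp` are assumptions that will differ between crawling tools, and the application itself may expect a different format, so check its instructions first. `load_crawl_export` is a hypothetical helper name.

```python
import csv
import io


def load_crawl_export(file_obj, url_col="Address", time_col="Crawl Timestamp"):
    """Read (url, timestamp) pairs from a crawler's CSV export.

    The default column names are assumptions; pass the names your
    crawling tool actually uses in its export.
    """
    reader = csv.DictReader(file_obj)
    rows = []
    for record in reader:
        url = (record.get(url_col) or "").strip()
        ts = (record.get(time_col) or "").strip()
        if url and ts:  # skip rows missing a URL or a timestamp
            rows.append((url, ts))
    return rows


# Usage with an in-memory sample instead of a real export file.
sample = io.StringIO(
    "Address,Crawl Timestamp\n"
    "https://example.com/,2024-01-01 10:00:00\n"
    "https://example.com/blog/,2024-01-01 10:00:30\n"
)
print(load_crawl_export(sample))
```

For a file on disk, open it with `open(path, newline="", encoding="utf-8")` and pass the handle in, as the `csv` module documentation recommends.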

Full technical documentation

For complete technical details on the idea, its implementation, the data structure, and advanced analysis with this new approach, see the author’s full post:

➡️ https://marinpopov.com/technical-seo/crawl-time-framework/